As the automotive industry shifts toward electrification, the performance and safety of electric vehicle (EV) battery packs under diverse environmental conditions have become critical research areas. The thermal management system (TMS) of an EV battery pack keeps cells at suitable operating temperatures, which directly affects battery life, efficiency, and safety. Extreme conditions, such as summers where ambient temperatures exceed 45°C or winters where they fall to -20°C, pose significant challenges to the thermal adaptability of EV battery packs. This study evaluates the thermal behavior of a liquid-cooled EV battery pack under such extremes using numerical simulation combined with experimental validation. Simulation parameters are first calibrated against room-temperature tests so that the model reproduces the measured thermal response. The goal is to assess whether the pack can keep cells within the safe operating range (typically 0°C to 45°C for lithium iron phosphate batteries) in harsh environments, thereby informing design improvements for broader climate adaptability.

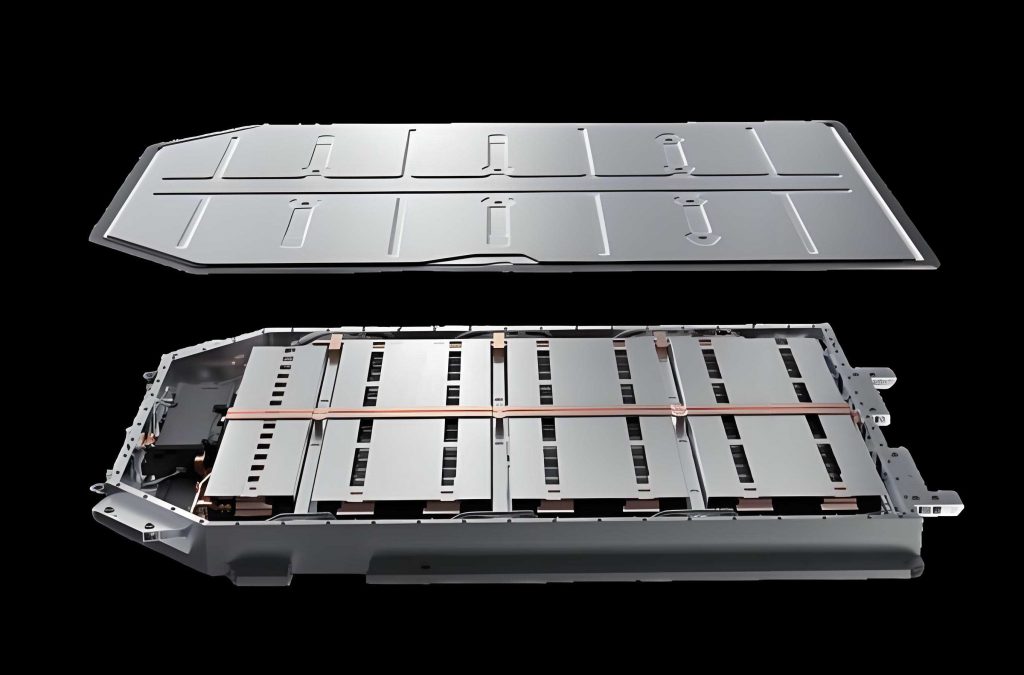

The EV battery pack under investigation consists of 48 prismatic lithium-ion cells arranged in a 4×12 configuration, each with a capacity of 280 Ah. The cooling system employs a bottom liquid-cooling plate with a 50% ethylene glycol-water mixture as the coolant. The cooling plate is coated with an insulating layer and bonded to the cells using thermally conductive adhesive. Busbars and copper connectors provide electrical connectivity, and the pack is designed for a 0.5C charge-discharge rate. To simulate real-world operating conditions, the coolant flow rate is set at 5 L/min with an inlet temperature of 20°C, while ambient air convection is modeled with heat transfer coefficients ranging from 2 to 5 W/(m²·K). The primary heat sources are Joule heating in the cells during discharge and resistive heating in the busbars and connectors. For this study, we define extreme conditions as high-temperature environments at 45°C and low-temperature environments at -20°C, representing worst-case scenarios in regions such as deserts or northern climates.

To analyze the thermal performance of the EV battery pack, we developed a detailed 3D computational fluid dynamics (CFD) model using numerical simulation software. The model omits non-essential components such as wiring harnesses and smooths minor geometric features to reduce computational cost while retaining thermal accuracy. Key materials in the EV battery pack, including cells, busbars, cooling plates, and thermal interfaces, are assigned property values based on standard datasheets and empirical measurements. The thermal parameters are summarized in Table 1, which lists thermal conductivity, density, and specific heat capacity for each component. These parameters are needed to solve the governing heat transfer equations, such as Fourier’s law for conduction:

$$q = -k \nabla T$$

where \( q \) is the heat flux, \( k \) is the thermal conductivity, and \( \nabla T \) is the temperature gradient. For convective cooling on the liquid-cooled plate, we apply Newton’s law of cooling:

$$q = h (T_s - T_f)$$

where \( h \) is the convective heat transfer coefficient, \( T_s \) is the surface temperature, and \( T_f \) is the coolant temperature. The heat generation within the EV battery pack is modeled based on experimental data. During the final half-hour of discharge at 0.5C, each cell produces approximately 12 W of heat, while busbars and connectors generate additional heat due to electrical resistance, calculated via Joule heating:

$$P = I^2 R$$

where \( P \) is the power dissipation, \( I \) is the current (140 A for busbars), and \( R \) is the resistance. The simulation solves the steady-state energy equation to obtain temperature distributions across the EV battery pack.
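The heat-source arithmetic above can be sketched in a few lines. The cell capacity, C-rate, cell count, and 12 W per-cell figure come from the text; the busbar resistance is not reported, so `BUSBAR_RESISTANCE_OHM` below is a purely illustrative assumption, as are the function names.

```python
def c_rate_current(capacity_ah: float, c_rate: float) -> float:
    """Current in amperes for a given cell capacity (Ah) and C-rate."""
    return capacity_ah * c_rate


def joule_heating(current_a: float, resistance_ohm: float) -> float:
    """Resistive power dissipation P = I^2 * R in watts."""
    return current_a ** 2 * resistance_ohm


# Values stated in the text.
CELL_CAPACITY_AH = 280.0
C_RATE = 0.5
N_CELLS = 48
CELL_HEAT_W = 12.0

# Assumption: illustrative busbar resistance, not a measured value.
BUSBAR_RESISTANCE_OHM = 50e-6

current = c_rate_current(CELL_CAPACITY_AH, C_RATE)
busbar_heat = joule_heating(current, BUSBAR_RESISTANCE_OHM)
cell_heat_total = N_CELLS * CELL_HEAT_W

print(f"Pack current at 0.5C: {current:.0f} A")
print(f"Heat per busbar (assumed resistance): {busbar_heat:.2f} W")
print(f"Total cell heat: {cell_heat_total:.0f} W")
```

With a resistance of this order, each busbar contributes on the order of a watt, well below the 576 W produced by the 48 cells together, although the real share depends on the actual connector resistances.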

Table 1. Thermal properties of the pack components.

| Component | Thermal Conductivity (W/(m·K)) | Density (kg/m³) | Specific Heat Capacity (J/(kg·K)) |
|---|---|---|---|
| Busbar (Aluminum) | 237 | 2702 | 903 |
| Cell (Anisotropic) | 20 (in-plane), 1.2 (through-plane) | 2800 | 1000 |
| Liquid Cooling Plate | 193 | 2800 | 880 |
| Coolant (50% EG-Water) | 0.384 | 1071.11 | 3300 |
| Thermal Adhesive | 1.2 | 1900 | 270 |
| Insulation Layer | 0.1 | 900 | 1000 |
| PC Board | 0.3 | 1200 | 1100 |
| Epoxy Resin | 0.2 | 2000 | 1.1 |
| Foam Padding | 0.05 | 10 | 1.3 |
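To show how the conductivities in Table 1 enter Fourier’s law, the following minimal sketch treats the path from a cell base to the coolant as plane layers in series, each with resistance \( R = L / (kA) \). The conductivities are taken from Table 1; the layer thicknesses, the contact area, and the assumption that all 12 W leaves through the cell base are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    conductivity: float  # W/(m·K), from Table 1
    thickness: float     # m, hypothetical


def conduction_resistance(layer: Layer, area_m2: float) -> float:
    """Plane-wall conduction resistance R = L / (k * A) in K/W."""
    return layer.thickness / (layer.conductivity * area_m2)


CONTACT_AREA_M2 = 0.012  # hypothetical cell-to-plate contact area
CELL_HEAT_W = 12.0       # per-cell heat from the text, assumed to exit via the base

layers = [
    Layer("thermal adhesive", 1.2, 1.0e-3),
    Layer("insulation layer", 0.1, 0.2e-3),
    Layer("cooling plate wall", 193.0, 2.0e-3),
]

resistances = {
    layer.name: conduction_resistance(layer, CONTACT_AREA_M2) for layer in layers
}
total_resistance = sum(resistances.values())
temperature_drop = CELL_HEAT_W * total_resistance

for name, value in resistances.items():
    print(f"{name}: {value:.4f} K/W")
print(f"Total: {total_resistance:.4f} K/W, drop at 12 W: {temperature_drop:.2f} K")
```

Under these assumed thicknesses the low-conductivity insulation layer dominates the stack even though it is the thinnest layer, which illustrates why interface materials matter for the cell-to-coolant temperature difference.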

To validate the simulation model, we conducted thermal tests on the EV battery pack in a controlled laboratory environment at 25°C ambient temperature. The pack was instrumented with 16 temperature sensors placed on busbar surfaces and distributed evenly across the pack to capture spatial variations. A thermal chamber maintained stable conditions, and a chiller supplied coolant at 20°C. The EV battery pack underwent a full charge-discharge cycle at 0.5C, with temperature data logged by the battery management system (BMS). The experimental results from the discharge phase were compared against simulation predictions, focusing on steady-state temperatures during the final 30 minutes. This comparison allowed us to calibrate uncertain parameters, such as contact resistances and interfacial thermal resistances, bringing simulation errors at the measurement points to about 1°C (the largest deviation in Table 2 is 1.24°C). The calibrated model was then used to extrapolate thermal behavior to extreme conditions without additional costly experiments.

The room-temperature simulation results for the EV battery pack indicate a maximum busbar surface temperature of 35.6°C and a minimum of 31.5°C, with hotter spots near longer copper connectors due to higher resistive heating. The experimental data show close agreement, as detailed in Table 2: 11 of the 16 simulated temperatures deviate by less than 1°C from the measured values, and the largest deviation is 1.24°C. The model predicts a higher temperature than was measured at 14 of the 16 points, so it errs on the conservative side. This validation supports the reliability of our thermal model for the EV battery pack. We then applied the same model to the extreme scenarios. For the high-temperature case at 45°C ambient, we assumed the cells were in the high-heat-generation state typical of the end of discharge (12 W per cell). For the low-temperature case at -20°C, we considered the cells in a static, non-heating state to simulate cold-soak conditions. The simulation outcomes for cell temperatures are summarized in Table 3, which shows that even under these extremes the EV battery pack maintains cells within the recommended operating range.

Table 2. Measured and simulated busbar temperatures at 25°C ambient.

| Sensor Point | Experimental Temperature (°C) | Simulated Temperature (°C) | Error, Experimental minus Simulated (°C) |
|---|---|---|---|
| T1 | 33.17 | 32.01 | +1.16 |
| T2 | 32.64 | 33.78 | -1.14 |
| T3 | 32.96 | 33.74 | -0.78 |
| T4 | 34.76 | 35.64 | -0.88 |
| T5 | 34.70 | 35.62 | -0.92 |
| T6 | 32.79 | 33.89 | -1.10 |
| T7 | 32.93 | 33.79 | -0.86 |
| T8 | 33.96 | 34.43 | -0.47 |
| T9 | 33.61 | 34.50 | -0.89 |
| T10 | 33.65 | 34.18 | -0.53 |
| T11 | 33.06 | 34.04 | -0.98 |
| T12 | 34.64 | 35.61 | -0.97 |
| T13 | 34.35 | 35.59 | -1.24 |
| T14 | 32.97 | 34.14 | -1.17 |
| T15 | 33.36 | 34.20 | -0.84 |
| T16 | 32.25 | 31.53 | +0.72 |
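The agreement in Table 2 can be summarized with a few standard error metrics. The sketch below recomputes them from the tabulated values; the choice of metrics (worst-case absolute error, mean signed error, RMSE, and the count of sensors within 1°C) is ours and is not part of the original validation procedure.

```python
import math

# (experimental, simulated) busbar temperatures in °C, copied from Table 2.
readings = {
    "T1": (33.17, 32.01), "T2": (32.64, 33.78), "T3": (32.96, 33.74),
    "T4": (34.76, 35.64), "T5": (34.70, 35.62), "T6": (32.79, 33.89),
    "T7": (32.93, 33.79), "T8": (33.96, 34.43), "T9": (33.61, 34.50),
    "T10": (33.65, 34.18), "T11": (33.06, 34.04), "T12": (34.64, 35.61),
    "T13": (34.35, 35.59), "T14": (32.97, 34.14), "T15": (33.36, 34.20),
    "T16": (32.25, 31.53),
}

# Error convention used in Table 2: experimental minus simulated.
errors = {name: exp - sim for name, (exp, sim) in readings.items()}
abs_errors = {name: abs(err) for name, err in errors.items()}

worst_sensor = max(abs_errors, key=abs_errors.get)
max_abs_error = abs_errors[worst_sensor]
mean_error = sum(errors.values()) / len(errors)
rmse = math.sqrt(sum(err ** 2 for err in errors.values()) / len(errors))
within_1c = sum(1 for err in abs_errors.values() if err < 1.0)

print(f"Worst sensor: {worst_sensor} ({max_abs_error:.2f} °C)")
print(f"Mean signed error: {mean_error:.2f} °C, RMSE: {rmse:.2f} °C")
print(f"Sensors within 1 °C: {within_1c} of {len(errors)}")
```

For the Table 2 data this reports T13 as the worst point at 1.24°C and 11 of 16 sensors within 1°C. The negative mean signed error reflects the model predicting slightly higher temperatures than were measured at most points.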

Under high-temperature conditions (45°C ambient), the simulation predicts a maximum cell temperature of 41.2°C, a minimum of 35.7°C, and an average of 39.2°C. The liquid cooling system therefore keeps cells below the 45°C upper safety limit, but the margin is only 3.8°C, so prolonged exposure or higher heat generation rates (e.g., during fast charging) could push temperatures past the limit. The temperature distribution within the EV battery pack is non-uniform, with hotter regions near the center and cooler areas at the periphery due to the coolant flow paths. This gradient can be quantified using the temperature difference metric:

$$\Delta T = T_{\text{max}} - T_{\text{min}}$$

which yields 5.5°C for the high-temperature case. Minimizing \(\Delta T\) is crucial for cell balancing and longevity in an EV battery pack.

In contrast, the low-temperature simulation (-20°C ambient) shows cell temperatures ranging from 3.7°C to 11.2°C, with an average of 7.8°C. Because the cells generate no heat in this case, the warming comes from the coolant loop, which holds the pack well above ambient and prevents the sub-zero cell temperatures that could lead to lithium plating or capacity loss. Heat transferred from the coolant sets this temperature level, while the thermal inertia of the pack components only slows the approach to it. The transient approach to that state is governed by the heat equation:

$$\rho c_p \frac{\partial T}{\partial t} = \nabla \cdot (k \nabla T) + \dot{q}$$

where \(\rho\) is density, \(c_p\) is specific heat, \(t\) is time, and \(\dot{q}\) is heat generation rate (zero in this case). The steady-state solution indicates that the EV battery pack acts as a thermal buffer, maintaining cells above 0°C even in extreme cold.
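As a rough illustration of how a cold-soaked pack approaches such a steady state, the sketch below replaces the spatial heat equation with a single-node lumped-capacitance model and integrates it with explicit Euler steps. This is a deliberate simplification, not the CFD model used in the study. The coolant and ambient temperatures come from the text; the two conductances and the heat capacity are hypothetical values, so the resulting temperature is illustrative and is not meant to reproduce the simulated 7.8°C average.

```python
def simulate_lumped_pack(t_start, t_coolant, t_ambient,
                         g_coolant, g_ambient, heat_capacity,
                         dt, duration):
    """Explicit Euler integration of
    C dT/dt = g_coolant * (T_coolant - T) + g_ambient * (T_ambient - T),
    with zero internal heat generation."""
    temperature = t_start
    history = [temperature]
    for _ in range(int(duration / dt)):
        net_heat = (g_coolant * (t_coolant - temperature)
                    + g_ambient * (t_ambient - temperature))
        temperature += dt * net_heat / heat_capacity
        history.append(temperature)
    return history


def steady_state(t_coolant, t_ambient, g_coolant, g_ambient):
    """Conductance-weighted mean of the two boundary temperatures."""
    return ((g_coolant * t_coolant + g_ambient * t_ambient)
            / (g_coolant + g_ambient))


T_COOLANT = 20.0         # °C, coolant inlet temperature from the text
T_AMBIENT = -20.0        # °C, cold-soak ambient from the text
G_COOLANT = 10.0         # W/K, hypothetical pack-to-coolant conductance
G_AMBIENT = 4.0          # W/K, hypothetical pack-to-ambient conductance
HEAT_CAPACITY = 250e3    # J/K, hypothetical lumped pack heat capacity

history = simulate_lumped_pack(
    t_start=T_AMBIENT, t_coolant=T_COOLANT, t_ambient=T_AMBIENT,
    g_coolant=G_COOLANT, g_ambient=G_AMBIENT, heat_capacity=HEAT_CAPACITY,
    dt=10.0, duration=48 * 3600.0,
)
t_ss = steady_state(T_COOLANT, T_AMBIENT, G_COOLANT, G_AMBIENT)

print(f"Temperature after 48 h: {history[-1]:.2f} °C")
print(f"Analytical steady state: {t_ss:.2f} °C")
```

In this simplified picture the heat capacity affects only how quickly the steady state is reached, while the ratio of the two conductances determines where between ambient and coolant temperature the pack settles.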

Table 3. Simulated cell temperatures under extreme ambient conditions.

| Environmental Condition | Maximum Cell Temperature (°C) | Minimum Cell Temperature (°C) | Average Cell Temperature (°C) |
|---|---|---|---|
| High-Temperature (45°C) | 41.2 | 35.7 | 39.2 |
| Low-Temperature (-20°C) | 11.2 | 3.7 | 7.8 |
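The temperature spread and the remaining safety margins follow directly from Table 3. The short sketch below computes them for both cases, assuming the 0°C to 45°C operating window quoted in the introduction; the dictionary layout and function name are illustrative.

```python
SAFE_MIN_C = 0.0   # lower operating limit quoted in the text
SAFE_MAX_C = 45.0  # upper operating limit quoted in the text

# Simulated cell temperatures in °C, copied from Table 3.
cases = {
    "high (45 °C ambient)": {"t_max": 41.2, "t_min": 35.7},
    "low (-20 °C ambient)": {"t_max": 11.2, "t_min": 3.7},
}


def summarize(t_max: float, t_min: float) -> dict:
    """Temperature spread and distance to each operating limit, in °C."""
    return {
        "delta_t": t_max - t_min,
        "margin_to_upper": SAFE_MAX_C - t_max,
        "margin_to_lower": t_min - SAFE_MIN_C,
    }


summary = {name: summarize(**values) for name, values in cases.items()}

for name, metrics in summary.items():
    print(f"{name}: dT = {metrics['delta_t']:.1f} °C, "
          f"upper margin = {metrics['margin_to_upper']:.1f} °C, "
          f"lower margin = {metrics['margin_to_lower']:.1f} °C")
```

The hot case leaves 3.8°C of headroom below the upper limit and the cold case leaves 3.7°C above the lower limit, so both extremes sit comparably close to their respective bounds.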

The thermal adaptability of the EV battery pack is further analyzed by examining key performance indicators such as thermal uniformity and cooling efficiency. For the high-temperature scenario, the cooling efficiency \(\eta\) can be defined as the ratio of heat removed by the coolant to the total heat generated:

$$\eta = \frac{\dot{m} c_p (T_{\text{out}} - T_{\text{in}})}{P_{\text{total}}}$$

where \(\dot{m}\) is the coolant mass flow rate, \(c_p\) is coolant specific heat, \(T_{\text{out}}\) and \(T_{\text{in}}\) are outlet and inlet temperatures, and \(P_{\text{total}}\) is the total heat dissipation from the EV battery pack. Assuming typical values, \(\eta\) exceeds 90%, demonstrating the effectiveness of the liquid cooling system. However, under low-temperature conditions, the focus shifts to heating strategies. Passive thermal management via insulation may suffice for short exposures, but active heating using the coolant or integrated heaters might be necessary for longer cold soaks to ensure the EV battery pack remains within optimal ranges.
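A minimal sketch of this efficiency calculation is given below. The flow rate, coolant density, specific heat, and inlet temperature are taken from the text and Table 1. The outlet temperature is not reported, so `T_OUT_C` is a hypothetical value, and `P_TOTAL_W` counts only the 48 cells at 12 W each because the busbar resistances are not given.

```python
def mass_flow_rate(volume_flow_l_per_min: float, density_kg_m3: float) -> float:
    """Convert a volumetric flow in L/min to a mass flow in kg/s."""
    return density_kg_m3 * volume_flow_l_per_min / 1000.0 / 60.0


def cooling_efficiency(m_dot: float, cp: float, t_out: float,
                       t_in: float, p_total: float) -> float:
    """Ratio of heat carried away by the coolant to total heat generated."""
    return m_dot * cp * (t_out - t_in) / p_total


FLOW_L_PER_MIN = 5.0       # from the text
COOLANT_DENSITY = 1071.11  # kg/m^3, Table 1
COOLANT_CP = 3300.0        # J/(kg·K), Table 1
T_IN_C = 20.0              # °C, from the text
T_OUT_C = 21.8             # °C, hypothetical outlet temperature
P_TOTAL_W = 48 * 12.0      # W, cells only (busbar heat omitted, assumption)

m_dot = mass_flow_rate(FLOW_L_PER_MIN, COOLANT_DENSITY)
eta = cooling_efficiency(m_dot, COOLANT_CP, T_OUT_C, T_IN_C, P_TOTAL_W)

print(f"Coolant mass flow: {m_dot:.4f} kg/s")
print(f"Cooling efficiency (assumed outlet temperature): {eta:.1%}")
```

At 5 L/min the coolant heat capacity rate is roughly 295 W/K, so the full 576 W of cell heat corresponds to a coolant temperature rise of only about 2 K. This means the efficiency estimate is sensitive to small errors in the measured outlet temperature.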

To enhance the thermal adaptability of EV battery packs, several design optimizations can be considered. First, the cooling plate geometry could be redesigned to improve flow distribution and reduce temperature gradients. For instance, implementing serpentine or parallel channels can lower \(\Delta T\). Second, advanced thermal interface materials with higher conductivity could decrease interfacial resistances between cells and the cooling plate. Third, adaptive thermal management strategies, such as variable coolant flow rates or temperature setpoints based on ambient conditions, could dynamically optimize performance. Fourth, incorporating phase change materials (PCMs) around cells could buffer thermal spikes in high-temperature environments or provide latent heat in cold starts. These improvements would make the EV battery pack more robust across a wider climate spectrum.

From a safety perspective, the EV battery pack must avoid thermal runaway, which can be triggered by excessive temperatures or internal shorts. The simulation results indicate that under extreme heat, cells stay below critical thresholds, but margins are slim. Therefore, additional safeguards like thermal fuses, venting mechanisms, and enhanced BMS monitoring are recommended. Moreover, the thermal model can be extended to simulate fault conditions, such as coolant pump failure or cell imbalance, to assess failure modes and design redundancies. Such analyses are vital for ensuring the reliability of EV battery packs in real-world applications.

The economic implications of thermal management in EV battery packs are also significant. Efficient cooling reduces energy consumption for auxiliary systems, extending driving range. Conversely, inadequate heating in cold climates can degrade battery capacity and increase charging times, affecting user experience. By optimizing thermal systems, manufacturers can lower lifecycle costs and improve sustainability. Our study provides a framework for evaluating these trade-offs using simulation, which is cost-effective compared to extensive environmental testing.

In conclusion, this study demonstrates the thermal adaptability of a liquid-cooled EV battery pack under extreme conditions through validated numerical simulations. The EV battery pack maintains cell temperatures within safe operating limits at both 45°C and -20°C ambient temperatures, showcasing the efficacy of the current design. However, the narrow margins in high-temperature scenarios suggest room for improvement. Future work should focus on experimental validation under extreme climates, multi-physics modeling including electrochemical effects, and integration of smart thermal controls. The methodologies presented here offer a comprehensive approach to assess and enhance the thermal performance of EV battery packs, contributing to safer and more durable electric vehicles. As the EV market expands globally, ensuring battery pack resilience across diverse environments will be paramount for widespread adoption and customer satisfaction.

To further illustrate the thermal dynamics, consider the overall heat balance equation for the EV battery pack:

$$Q_{\text{gen}} = Q_{\text{cool}} + Q_{\text{loss}}$$

where \(Q_{\text{gen}}\) is the heat generated by cells and conductors, \(Q_{\text{cool}}\) is the heat removed by the liquid cooling system, and \(Q_{\text{loss}}\) is the heat lost to the environment via convection and radiation. In extreme cold with the cells idle, \(Q_{\text{gen}}\) is negligible, so the balance reduces to \(Q_{\text{cool}} = -Q_{\text{loss}}\): the coolant loop must supply the heat that escapes to the environment, and the cells settle at temperatures between ambient and the coolant inlet temperature, as observed in the low-temperature simulation. Proper insulation reduces \(Q_{\text{loss}}\) and therefore helps the EV battery pack retain heat. This principle is crucial for designing EV battery packs for Arctic or high-altitude regions.
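To put rough numbers on this balance for the cold-soak case, the sketch below estimates the convective loss with Newton’s law of cooling over the 2 to 5 W/(m²·K) range quoted for ambient air, then solves the balance for \(Q_{\text{cool}}\). The external surface area is hypothetical, radiation is ignored, and using the 7.8°C average cell temperature as the outer surface temperature is a crude assumption that likely overestimates the loss.

```python
def convective_loss(h: float, area_m2: float,
                    t_surface: float, t_ambient: float) -> float:
    """Heat lost to ambient air, Q_loss = h * A * (T_s - T_amb), in watts."""
    return h * area_m2 * (t_surface - t_ambient)


def coolant_heat_removed(q_gen: float, q_loss: float) -> float:
    """Q_cool from Q_gen = Q_cool + Q_loss.
    A negative value means the coolant supplies heat to the pack."""
    return q_gen - q_loss


SURFACE_AREA_M2 = 2.0  # hypothetical external pack surface area
T_SURFACE_C = 7.8      # °C, average cell temperature used as a surface proxy
T_AMBIENT_C = -20.0    # °C, from the text
Q_GEN_W = 0.0          # cells idle during cold soak

results = {}
for h in (2.0, 5.0):   # W/(m²·K), range given in the text
    q_loss = convective_loss(h, SURFACE_AREA_M2, T_SURFACE_C, T_AMBIENT_C)
    results[h] = (q_loss, coolant_heat_removed(Q_GEN_W, q_loss))

for h, (q_loss, q_cool) in results.items():
    print(f"h = {h:.0f} W/(m²·K): Q_loss = {q_loss:.1f} W, Q_cool = {q_cool:.1f} W")
```

The negative \(Q_{\text{cool}}\) values indicate that, under these assumptions, the coolant loop acts as a heater delivering on the order of one to a few hundred watts to offset ambient losses.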

Another aspect is the thermal coupling between cells in the EV battery pack. Due to their close packing, heat transfer between adjacent cells can be modeled using thermal networks. The equivalent thermal resistance \(R_{\text{th}}\) between cells depends on the interfacial materials and air gaps. Minimizing \(R_{\text{th}}\) improves thermal uniformity, which is beneficial for cell aging consistency. For the EV battery pack studied, the thermal resistance matrix can be derived from simulation data to identify hotspots and guide layout modifications.
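A thermal network of this kind can be sketched as a chain of cell nodes, each linked to its neighbours through \(R_{\text{th}}\) and to the coolant through a cell-to-plate resistance. The example below solves the steady-state node balances by Gauss-Seidel iteration. The four-cell chain, the resistance values, and the slightly higher heat input on one cell are all hypothetical and are not derived from the simulation data.

```python
def solve_cell_chain(heat_w, r_cell_cell, r_cell_plate, t_coolant,
                     tol=1e-10, max_iter=100_000):
    """Steady-state temperatures of a 1D chain of cells.

    Node balance for cell i:
        q_i + sum_j (T_j - T_i) / r_cell_cell
            + (T_coolant - T_i) / r_cell_plate = 0
    solved by Gauss-Seidel iteration.
    """
    n = len(heat_w)
    temps = [t_coolant] * n
    for _ in range(max_iter):
        max_change = 0.0
        for i in range(n):
            neighbours = [j for j in (i - 1, i + 1) if 0 <= j < n]
            numerator = (heat_w[i] + t_coolant / r_cell_plate
                         + sum(temps[j] / r_cell_cell for j in neighbours))
            denominator = 1.0 / r_cell_plate + len(neighbours) / r_cell_cell
            new_temp = numerator / denominator
            max_change = max(max_change, abs(new_temp - temps[i]))
            temps[i] = new_temp
        if max_change < tol:
            break
    return temps


HEAT_W = [12.0, 12.0, 14.0, 12.0]  # W, hypothetical hotspot on the third cell
R_CELL_PLATE = 1.0                 # K/W, hypothetical cell-to-coolant resistance
T_COOLANT_C = 20.0                 # °C, from the text

temps_weak = solve_cell_chain(HEAT_W, 5.0, R_CELL_PLATE, T_COOLANT_C)
temps_strong = solve_cell_chain(HEAT_W, 0.5, R_CELL_PLATE, T_COOLANT_C)

spread_weak = max(temps_weak) - min(temps_weak)
spread_strong = max(temps_strong) - min(temps_strong)

print(f"Spread with R_th = 5.0 K/W: {spread_weak:.2f} K")
print(f"Spread with R_th = 0.5 K/W: {spread_strong:.2f} K")
```

Lowering the cell-to-cell resistance lets the hotter cell share heat with its neighbours, which narrows the temperature spread while leaving the total heat delivered to the coolant unchanged.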

Finally, the scalability of this approach should be noted. The same simulation methodology can be applied to larger EV battery packs or to complete battery systems in electric vehicles. By adjusting boundary conditions and component properties, designers can predict thermal behavior under various driving cycles and environmental stresses. This proactive analysis reduces development time and costs while helping ensure that EV battery packs meet stringent safety standards. As battery technology evolves, continuous refinement of thermal models will be essential to keep pace with higher energy densities and faster charging requirements.